Data Center: Best Practices For Change Management

As system and network administrators, our job is to keep everything running as it is while still keeping up with current technology. When something breaks, you will be the one who touched something that was not broken; yet at the same time you will be asked whether people are expected to go on living with Windows 2000 systems.

Inevitably the time will come to make changes in the data center: upgrading existing hardware or deploying new hardware, upgrading existing software or deploying new software, migrating physical machines to virtual infrastructure, upgrading network infrastructure, deploying new services, and so on. These are technical tasks that we undertake as part of our daily jobs. The formal definition of the change management process in the Information Technology Infrastructure Library (ITIL) is broader and stricter than what I will discuss in this article. That is the basis of this post: I will be discussing my experiences in the data center rather than giving an overview of ITIL concepts.

Let me start with the obvious. Have redundancy and make the redundant components as identical as possible. By identical I mean the same operating system, the same patch level, the same switch, and, if virtual, the same virtualization platform, the same guest tools version, the same network card type (both, say, E1000 or both VMXNET) and the like. If you have time and if it is possible, introduce the redundant system into production to see if everything is working as expected. For example, in a remote desktop server farm I keep three more servers than needed, so I can make changes and, if anything goes wrong, immediately remove the affected servers. Document everything from start to finish and identify all the components involved: you can discuss the procedure with your colleagues in detail and get their input, and if anything goes wrong everybody can follow your steps (more on that later).
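
As a rough illustration of checking that redundant components really are identical, here is a minimal Python sketch that compares two inventory records and reports every attribute that differs. The attribute names and values are made up for the example; in practice they would come from whatever inventory or monitoring tool you already use.

```python
def find_drift(primary: dict, replica: dict) -> dict:
    """Return {attribute: (primary_value, replica_value)} for every mismatch."""
    drift = {}
    # Look at every attribute that appears on either side.
    for key in sorted(set(primary) | set(replica)):
        a = primary.get(key, "<missing>")
        b = replica.get(key, "<missing>")
        if a != b:
            drift[key] = (a, b)
    return drift


# Hypothetical inventory records for two nodes that are supposed to be twins.
node_a = {
    "os": "Windows Server 2012 R2",
    "patch_level": "2014-03",
    "hypervisor": "ESXi 5.5",
    "guest_tools": "9.4.0",
    "nic_type": "VMXNET3",
}
node_b = {
    "os": "Windows Server 2012 R2",
    "patch_level": "2014-03",
    "hypervisor": "ESXi 5.5",
    "guest_tools": "9.0.5",
    "nic_type": "E1000",
}

for attribute, (a, b) in find_drift(node_a, node_b).items():
    print(f"{attribute}: {a} != {b}")
```

An empty result means the two records match on every attribute listed; anything reported is worth fixing before the change, not after.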

Even if you have set everything up correctly and your change went through without any problems, wait at least a couple of days, preferably a full week, before you consider the change successful. A data center is a complex environment, and it is sometimes impossible to predict how many things a small change can affect in the background.

For patch management, implement a central patch management solution. This can be Windows Server Update Services, System Center Configuration Manager, Microsoft Group Policy, Dell KACE or a similar tool. You will be able to control updates centrally, get a holistic view of the update process, and make sure that each update is downloaded only once.

After all these steps, even if everything went perfectly, wait until the old server is truly obsolete. Never assume that after a successful change everything is done and the old server can be decommissioned. Even if you have decommissioned the server, wait some more before getting rid of it. I cannot count how many times I have needed to boot a server after decommissioning it, or tried to pull data or configuration from a server whose computer account had been deleted from Active Directory. Pull the plug only when you are absolutely sure that the server is no longer needed. You never know what awaits you down the road.

Always get multiple inputs: the more people you ask, the more gaps you find. Remember how I advised you to document your change in detail? Use this document as the basis for discussion with your colleagues. Ideally it should be thorough enough to cover all the gaps, but of course we are human and may have left something out. Plus, different people have different points of view; they may point out things you did not notice and sometimes will give you completely different ideas. When you have finished, prepare a checklist and see if everything works in your test system. Update the checklist with what you learn from your tests and discuss it again. Repeat this until everybody is comfortable with the change. You cannot imagine how many headaches this process will save you. (… and one more thing: don’t skip any items on the checklist and don’t try to cut steps. Murphy will catch you.)

What will you do if your change is not successful? How will you roll back changes? How will you ensure that the business is not interrupted? You need a solid backup plan that is as detailed as the change plan. Follow the same steps: prepare the document, discuss, revise, and test if possible. Think about all the options in the backup plan and, if you ask me, get creative: take snapshots, export registry keys, save system information to a text file, have drivers ready, and so on. If that unfortunate moment comes, you will see how important all of these are.
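
To make the "system information in a text file" idea concrete, here is a small Python sketch that records a few basic facts about the machine it runs on before a change. It uses only the standard library; the choice of fields, the file name pattern, and the function names are my own assumptions, and a real pre-change snapshot would usually capture far more (installed software, services, network configuration).

```python
import json
import platform
import socket
from datetime import datetime, timezone
from pathlib import Path


def collect_system_info() -> dict:
    """Gather a few basic facts about the local machine."""
    return {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "hostname": socket.gethostname(),
        "os": platform.system(),
        "os_release": platform.release(),
        "os_version": platform.version(),
        "machine": platform.machine(),
    }


def save_snapshot(directory: Path) -> Path:
    """Write the collected information to <hostname>-pre-change.txt."""
    info = collect_system_info()
    directory.mkdir(parents=True, exist_ok=True)
    target = directory / f"{info['hostname']}-pre-change.txt"
    target.write_text(json.dumps(info, indent=2), encoding="utf-8")
    return target
```

Calling `save_snapshot(Path("change-snapshots"))` right before the change window leaves a dated text file you can compare against if you have to roll back. Keep the file somewhere other than the server being changed.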

Of course you have to schedule your change wisely. If you are working for a retail company with peak sales during the weekends, then you cannot schedule the change for Friday evening. Likewise, if you are working for a marine port, you have to find a time slot that fits around the ships. Suppose that you scheduled your change for Sunday evening at 20:00 and you expect it to take 4 hours. What if everything stops working after 2 hours? Will you have enough time from Sunday 22:00 to Monday 08:30 to make sure everything is up and running? Make sure that you speak with all the people involved (users, stakeholders, colleagues) and choose your change schedule accordingly. (… and one more thing: if you schedule it for Friday evening, don’t forget to check the results during the weekend. Many times I did not, only to come in on Monday morning and find messed-up systems.)
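
The arithmetic in the Sunday example is easy to check with a few lines of Python. The calendar date below is arbitrary (any Sunday will do), and the 3-hour rollback estimate is an assumption added for illustration; only the 20:00 start, the failure after 2 hours, and the 08:30 opening come from the example above.

```python
from datetime import datetime, timedelta


def recovery_window(start: datetime, failure_after: timedelta,
                    business_open: datetime) -> timedelta:
    """Time left between the moment things break and the start of business."""
    failure_time = start + failure_after
    return business_open - failure_time


def rollback_fits(window: timedelta, rollback_estimate: timedelta) -> bool:
    """True if the estimated rollback fits inside the recovery window."""
    return rollback_estimate <= window


start = datetime(2024, 1, 7, 20, 0)          # a Sunday, 20:00
business_open = datetime(2024, 1, 8, 8, 30)  # the following Monday, 08:30
window = recovery_window(start, timedelta(hours=2), business_open)

print(window)                                     # 10:30:00
print(rollback_fits(window, timedelta(hours=3)))  # True
```

Ten and a half hours sounds generous, but remember that it also has to cover diagnosing the failure, running the rollback, and verifying that everything is working again.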

Prepare all of this and present it to the managers, owners, VPs, and whoever else is involved, and ask for their approval. This process lets everybody know that some serious and risky work is about to be done on the systems, and ensures that you have everyone's approval and support. Don’t try to be the hero and go it alone. Make sure that everybody shares the risk in some way, so that if anything fails, people are already informed and prepared to provide the support you need. Then you can get on with your work.

We IT people are always under pressure to rush things, finish the task at hand, move on to the next one, and keep the systems as up to date as possible. I advise you to be a little conservative, test everything as much as possible, and play it safe. Working this way will build you a good track record over time. When the time comes to take a risk, people will say that you took it because you had to, not because you are someone who loves to take risks.

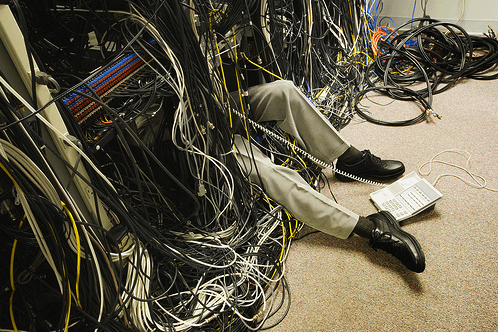

Image credit: datacenterandcolocation.com